Text Classification using Python spaCy

In the previous two articles on text analytics, we’ve looked at some of the cool things spaCy can do in general. In this article, we will learn how to derive meaningful patterns and themes from text data. This is useful in a wide variety of data science applications: spam filtering, support tickets, social media analysis, contextual advertising, reviewing customer feedback, and more.

In this article, We’ll dive into text classification using spacy, specifically Logistic Regression Classification, using some real-world data (text reviews of Amazon’s Alexa smart home speaker).

Text Classification

Let’s look at a bigger real-world application of some of these natural language processing techniques: text classification. Quite often, we may find ourselves with a set of text data that we’d like to classify according to some parameters (perhaps the subject of each snippet, for example) and text classification is what will help us to do this.

The diagram below illustrates the big-picture view of what we want to do when classifying text. First, we extract the features we want from our source text (and any tags or metadata it came with), and then we feed our cleaned data into a machine learning algorithm that does the classification for us.

Importing Libraries

We’ll start by importing the libraries we’ll need for this task. We’ve already imported spaCy, but we’ll also want pandas and scikit-learn to help with our analysis.

import pandas as pd

from sklearn.feature_extraction.text import CountVectorizer,TfidfVectorizer

from sklearn.base import TransformerMixin

from sklearn.pipeline import PipelineLoading Data

Above, we have looked at some simple examples of text analysis with spaCy, but now we’ll be working on some Logistic Regression Classification using scikit-learn. To make this more realistic, we’re going to use a real-world data set—this set of Amazon Alexa product reviews.

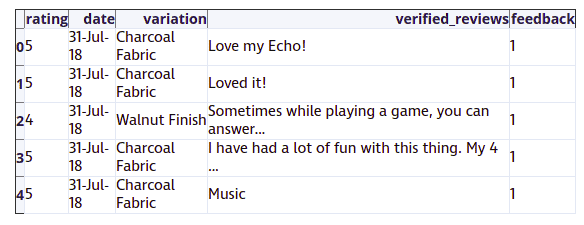

This data set comes as a tab-separated file (.tsv). It has five columns: rating, date, variation, verified_reviews, feedback.

rating denotes the rating each user gave the Alexa (out of 5). date indicates the date of the review, and variation describes which model the user reviewed. verified_reviews contains the text of each review, and feedback contains a sentiment label, with 1 denoting positive sentiment (the user liked it) and 0 denoting negative sentiment (the user didn’t).

This dataset has consumer reviews of amazon Alexa products like Echos, Echo Dots, Alexa Firesticks, etc. What we’re going to do is develop a classification model that looks at the review text and predicts whether a review is positive or negative. Since this data set already includes whether a review is positive or negative in the feedback column, we can use those answers to train and test our model. Our goal here is to produce an accurate model that we could then use to process new user reviews and quickly determine whether they were positive or negative.

Let’s start by reading the data into a pandas dataframe and then using the built-in functions of pandas to help us take a closer look at our data.

# Loading TSV file

df_amazon = pd.read_csv ("datasets/amazon_alexa.tsv", sep="\t")# Top 5 records

df_amazon.head()

# View data information

df_amazon.info()

Output: <class 'pandas.core.frame.DataFrame'> RangeIndex: 3150 entries, 0 to 3149 Data columns (total 5 columns): rating 3150 non-null int64 date 3150 non-null object variation 3150 non-null object verified_reviews 3150 non-null object feedback 3150 non-null int64 dtypes: int64(2), object(3) memory usage: 123.1+ KB# Feedback Value count

df_amazon.feedback.value_counts()Output: 1 2893 0 257 Name: feedback, dtype: int64

Tokening the Data with spaCy

Now that we know what we’re working with, let’s create a custom tokenizer function using spaCy. We’ll use this function to automatically strip information we don’t need, like stopwords and punctuation, from each review.

We’ll start by importing the English models we need from spaCy, as well as Python’s string module, which contains a helpful list of all punctuation marks that we can use in string.punctuation. We’ll create variables that contain the punctuation marks and stopwords we want to remove, and a parser that runs input through spaCy‘s English module.

Then, we’ll create a spacy_tokenizer() a function that accepts a sentence as input and processes the sentence into tokens, performing lemmatization, lowercasing, and removing stop words. This is similar to what we did in the examples earlier in this tutorial, but now we’re putting it all together into a single function for preprocessing each user review we’re analyzing.

import string

from spacy.lang.en.stop_words import STOP_WORDS

import spacy

# Create our list of punctuation marks

punctuations = string.punctuation

# stopwords

stop_words = STOP_WORDS

# Load English tokenizer, tagger, parser, NER and word vectors

nlp = spacy.load('en_core_web_sm')

# Creating our tokenizer function

def spacy_tokenizer(sentence):

# Creating our token object, which is used to create documents with linguistic annotations.

mytokens = nlp(sentence)

# Lemmatization and converting each token into lowercase

mytokens = [ word.lemma_.lower() for word in mytokens]

# Removing stop words and punctuations

mytokens = [ word for word in mytokens if word not in stop_words or word not in punctuations ]

# return preprocessed list of tokens

return mytokensDefining a Custom Transformer

To further clean our text data, we’ll also want to create a custom transformer for removing initial and end spaces and converting text into lower case. Here, we will create a custom predictors class wich inherits the TransformerMixin class. This class overrides the transform, fit and get_parrams methods. We’ll also create a clean_text() function that removes spaces and converts text into lowercase.

class predictors(TransformerMixin):

def transform(self, X, **transform_params):

# Cleaning Text

return [clean_text(text) for text in X]

def fit(self, X, y=None, **fit_params):

return self

def get_params(self, deep=True):

return {}

# Basic function to clean the text

def clean_text(text):

# Removing spaces and converting text into lowercase

return text.strip().lower()Vectorization Feature Engineering (TF-IDF)

When we classify text, we end up with text snippets matched with their respective labels. But we can’t simply use text strings in our machine learning model; we need a way to convert our text into something that can be represented numerically just like the labels (1 for positive and 0 for negative) are. Classifying text in positive and negative labels is called sentiment analysis. So we need a way to represent our text numerically.

One tool we can use for doing this is called Bag of Words. BoW converts text into the matrix of the occurrence of words within a given document. It focuses on whether given words occurred or not in the document, and it generates a matrix that we might see referred to as a BoW matrix or a document term matrix.

We can generate a BoW matrix for our text data by using scikit-learn‘s CountVectorizer. In the code below, we’re telling CountVectorizer to use the custom spacy_tokenizer function we built as its tokenizer and defining the ngram range we want.

N-grams are combinations of adjacent words in a given text, where n is the number of words that included in the tokens. for example, in the sentence “Who will win the football world cup in 2022?” unigrams would be a sequence of single words such as “who”, “will”, “win” and so on. Bigrams would be a sequence of 2 contiguous words such as “who will”, “will win”, and so on. So the ngram_range parameter we’ll use in the code below sets the lower and upper bounds of our ngrams (we’ll be using unigrams). Then we’ll assign the ngrams to bow_vector.

bow_vector = CountVectorizer(tokenizer = spacy_tokenizer, ngram_range=(1,1))We’ll also want to look at the TF-IDF (Term Frequency-Inverse Document Frequency) for our terms. This sounds complicated, but it’s simply a way of normalizing our Bag of Words(BoW) by looking at each word’s frequency in comparison to the document frequency. In other words, it’s a way of representing how important a particular term is in the context of a given document, based on how many times the term appears and how many other documents that same term appears in. The higher the TF-IDF, the more important that term is to that document.

We can represent this with the following mathematical equation:

Of course, we don’t have to calculate that by hand! We can generate TF-IDF automatically using scikit-learn‘s TfidfVectorizer. Again, we’ll tell it to use the custom tokenizer that we built with spaCy, and then we’ll assign the result to the variable tfidf_vector.

tfidf_vector = TfidfVectorizer(tokenizer = spacy_tokenizer)Splitting The Data into Training and Test Sets

We’re trying to build a classification model, but we need a way to know how it’s actually performing. Dividing the dataset into a training set and a test set the tried-and-true method for doing this. We’ll use half of our data set as our training set, which will include the correct answers. Then we’ll test our model using the other half of the data set without giving it the answers, to see how accurately it performs.

Conveniently, scikit-learn gives us a built-in function for doing this: train_test_split(). We just need to tell it the feature set we want it to split (X), the labels we want it to test against (ylabels), and the size we want to use for the test set (represented as a percentage in decimal form).

from sklearn.model_selection import train_test_split

X = df_amazon['verified_reviews'] # the features we want to analyze

ylabels = df_amazon['feedback']

# the labels, or answers, we want to test against

X_train, X_test, y_train, y_test = train_test_split(X, ylabels, test_size=0.3)Creating a Pipeline and Generating the Model

Now that we’re all set up, it’s time to actually build our model! We’ll start by importing the LogisticRegression module and creating a LogisticRegression classifier object.

Then, we’ll create a pipeline with three components: a cleaner, a vectorizer, and a classifier. The cleaner uses our predictors class object to clean and preprocess the text. The vectorizer uses countvector objects to create the bag of words matrix for our text. A classifier is an object that performs the logistic regression to classify the sentiments.

Once this pipeline is built, we’ll fit the pipeline components using fit().

# Logistic Regression Classifier

from sklearn.linear_model import LogisticRegression

classifier = LogisticRegression()

# Create pipeline using Bag of Words

pipe = Pipeline([("cleaner", predictors()),

('vectorizer', bow_vector),

('classifier', classifier)])

# model generation

pipe.fit(X_train,y_train)Output:

Pipeline(memory=None,steps=[('cleaner', <__main__.predictors object at 0x00000254DA6F8940>), ('vectorizer', CountVectorizer(analyzer='word', binary=False, decode_error='strict',dtype=<class 'numpy.int64'>, encoding='utf-8', input='content',lowercase=True, max_df=1.0, max_features=None, min_df=1,

...ty='l2', random_state=None, solver='liblinear', tol=0.0001, verbose=0, warm_start=False))])

Evaluating the Model

Let’s take a look at how our model actually performs! We can do this using the metrics module from scikit-learn. Now that we’ve trained our model, we’ll put our test data through the pipeline to come up with predictions. Then we’ll use various functions of the metrics module to look at our model’s accuracy, precision, and recall.

- Accuracy refers to the percentage of the total predictions our model makes that are completely correct.

- Precision describes the ratio of true positives to true positives plus false positives in our predictions.

- Recall describes the ratio of true positives to true positives plus false negatives in our predictions.

The documentation links above offer more details and more precise definitions of each term, but the bottom line is that all three metrics are measured from 0 to 1, where 1 is predicting everything completely correctly. Therefore, the closer our model’s scores are to 1, the better.

from sklearn import metrics

# Predicting with a test dataset

predicted = pipe.predict(X_test)# Model Accuracy

print("Logistic Regression Accuracy:",metrics.accuracy_score(y_test, predicted))

print("Logistic Regression Precision:",metrics.precision_score(y_test, predicted))

print("Logistic Regression Recall:",metrics.recall_score(y_test, predicted))Output: Logistic Regression Accuracy: 0.9417989417989417 Logistic Regression Precision: 0.9528508771929824 Logistic Regression Recall: 0.9863791146424518

In other words, overall, our model correctly identified a comment’s sentiment 94.1% of the time. When it predicted a review was positive, that review was actually positive 95% of the time. When handed a positive review, our model identified it as positive 98.6% of the time

Summary

Congratulations, you have made it to the end of this tutorial!

In this article, we have built our own machine learning model with scikit-learn. Of course, this is just the beginning, and there’s a lot more that both spaCy and scikit-learn have to offer Python data scientists.

This article is originally published at https://www.dataquest.io/blog/tutorial-text-classification-in-python-using-spacy/

Reach out to me on Linkedin: https://www.linkedin.com/in/avinash-navlani/