MLOps Tutorial

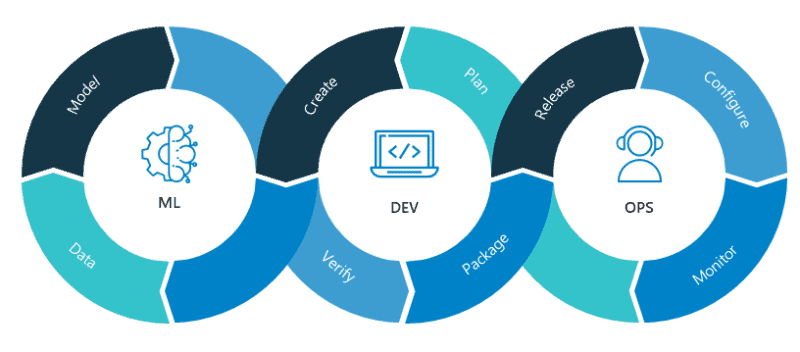

Machine Learning Operations (MLOps) is a multidisciplinary field that combines machine learning with the software development lifecycle. It sits at the overlap between machine learning and IT operations. MLOps monitors the performance of machine learning models in production environments, detects issues, and makes changes as needed. It requires a combination of skills such as programming, machine learning, workflow management, version control, CI/CD pipelines, Docker, Kubernetes, and cloud platforms.

The main objective of MLOps is to provide an end-to-end automated solution that covers data collection, feature engineering, model training, deployment, model retraining, and monitoring of machine learning models in a production environment. The ultimate goal is large-scale, reliable, and secure deployment of ML models.

What is MLOps?

MLOps brings together machine learning, DevOps, and data engineering with the aim of building, deploying, and maintaining machine learning models in a production environment efficiently. We can consider MLOps as the process of automating machine learning operations using DevOps techniques.

Difference between MLOps and DevOps

- DevOps facilitates a streamlined software development process for designing, testing, deploying, and monitoring any software application in production. MLOps facilitates a streamlined ML model development process for feature engineering, model training, model deployment, and monitoring of any ML application.

- DevOps automates the deployment of software applications to production, often in just a few minutes, and helps maintain them reliably. Similarly, MLOps automates the deployment of ML models to production so they can be released faster.

- DevOps empowers various teams to build and ship their software applications in a continuous integration and delivery mode, while MLOps empowers data science and IT teams to connect, validate, and manage operations effectively.

- DevOps bridges the gap between the development and deployment of an application by providing automatic code quality checks, tests, and reliable deployment. MLOps bridges the gap between model training and deployment in production through automated model retraining, hyperparameter tuning, model assessment, and computing model drift.

- DevOps extends the agile development process via building, testing, deploying, and delivering continuously. MLOps utilizes that approach in order to deploy and deliver ML solutions effectively.

- DevOps and MLOps both focus on end-to-end process automation.
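
As a rough illustration of the "computing model drift" step mentioned above, the sketch below compares a feature's training distribution with its production distribution using the Population Stability Index (PSI). PSI is just one common drift measure, picked here for illustration. The function name, the bin count, the sample data, and the 0.2 alert threshold are all assumptions rather than part of any particular MLOps tool.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Return the PSI between a reference sample and a newer sample.

    Bin edges come from the reference (training) sample; values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # all reference values identical; avoid dividing by zero

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each fraction at a tiny value so log() never sees zero.
        return [max(c / len(values), 1e-6) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical data: the production feature has shifted upward.
training = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff; tune per use case
needs_retraining = population_stability_index(training, production) > DRIFT_THRESHOLD
```

In an automated setup, a flag like `needs_retraining` could be what triggers the retraining job, instead of someone noticing the degradation by hand.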

Why Should You Use MLOps?

Data scientists run many experiments in order to train production-ready ML models, and the machine learning workflow needs to track those experiments to arrive at a fine-tuned production model. ML model development involves many activities, such as model retraining, hyperparameter tuning, model assessment, drift detection, and model deployment. Data scientists track these details because small changes in the input data may affect model performance. Logging the inputs and outputs of each experiment helps you quickly check what worked and what didn't.
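
To make the idea of logging experiment inputs and outputs concrete, here is a minimal sketch that uses only the Python standard library. It appends each run's parameters and metrics to a JSON Lines file and then looks up the best run. The function names (`log_run`, `best_run`), the file name, and the example parameters and metrics are hypothetical. Dedicated experiment trackers offer far more than this, so treat it as an illustration of the concept only.

```python
import json
import tempfile
import time
from pathlib import Path
from typing import Any, Dict


def log_run(log_path: Path, params: Dict[str, Any], metrics: Dict[str, float]) -> Dict[str, Any]:
    """Append one experiment record (inputs and outputs) as a JSON line."""
    record = {"timestamp": time.time(), "params": params, "metrics": metrics}
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def best_run(log_path: Path, metric: str) -> Dict[str, Any]:
    """Return the logged run with the highest value for the given metric."""
    with log_path.open(encoding="utf-8") as f:
        runs = [json.loads(line) for line in f if line.strip()]
    return max(runs, key=lambda run: run["metrics"][metric])


if __name__ == "__main__":
    # Hypothetical runs with made-up hyperparameters and scores.
    with tempfile.TemporaryDirectory() as tmp:
        log_file = Path(tmp) / "runs.jsonl"
        log_run(log_file, {"learning_rate": 0.1, "max_depth": 3}, {"accuracy": 0.81})
        log_run(log_file, {"learning_rate": 0.01, "max_depth": 5}, {"accuracy": 0.86})
        print(best_run(log_file, "accuracy")["params"])
```

Because every run is stored with its parameters, you can later answer "which settings produced this model?" without relying on memory or notebook history.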

MLOps Tools List

The main goal of MLOps is to develop and deploy machine learning (ML) models efficiently in a production environment. Keeping track of the experiments, retraining, hyperparameter tuning, model assessment, drift, and deployment involved requires specialized tools. Here are some of the most common types of tools used in MLOps:

- Track ML Experiments: For experimentation purposes, we can use tools such as MLflow, ClearML, Neptune, Weights & Biases, and Comet. MLflow and ClearML are full machine learning life cycle tools. Weights & Biases tracks and visualizes experiments. Neptune is an experiment tracker and model registry.

- Containerization: For containerization purposes, we can use tools such as Docker and Kubernetes, along with managed cloud services such as AKS (Azure Kubernetes Service), GKE (Google Kubernetes Engine), and Amazon EKS. Docker packages applications into containers and runs them, while Kubernetes automates the deployment, scaling, and management of containerized applications.

- CI/CD Pipeline: For CI/CD purposes, we can use tools such as Jenkins, CircleCI, and GitLab. Jenkins is an open-source continuous integration and delivery tool. GitLab is a complete platform for version control, issue tracking, code review, and CI/CD.

- Monitoring: For monitoring purposes, we can use Fiddler and Great Expectations. Fiddler is a machine learning model monitoring tool, and Great Expectations is a data validation and monitoring tool.

- Workflow Management Tool: For managing workflows, we can use Airflow and Luigi. Airflow is used for scheduling and managing workflows. Luigi is used for complex data pipelines for batch jobs.

These are just a few examples from each category of MLOps tools. Based on my experience, I recommend focusing on MLflow, Docker, Kubernetes, CircleCI or Jenkins, and Airflow, because they are widely used across organizations.
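
Workflow managers such as Airflow and Luigi are built around the idea of running tasks in dependency order. The sketch below shows that idea with Python's standard-library `graphlib` module instead of either tool's own API, so it is not Airflow or Luigi code. The task names and the `run_pipeline` and `make_task` helpers are hypothetical placeholders, loosely following the pipeline stages described earlier.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable, Dict, List, Set


def run_pipeline(
    tasks: Dict[str, Callable[[], None]], depends_on: Dict[str, Set[str]]
) -> List[str]:
    """Run each task after all of its upstream tasks; return the run order."""
    order = list(TopologicalSorter(depends_on).static_order())
    for name in order:
        tasks[name]()
    return order


executed: List[str] = []


def make_task(name: str) -> Callable[[], None]:
    """Build a placeholder task that only records that it ran."""
    def task() -> None:
        executed.append(name)
    return task


steps = ["collect_data", "engineer_features", "train_model", "deploy_model", "monitor_model"]
tasks = {name: make_task(name) for name in steps}

# Each key lists the tasks that must finish before it can start.
depends_on = {
    "engineer_features": {"collect_data"},
    "train_model": {"engineer_features"},
    "deploy_model": {"train_model"},
    "monitor_model": {"deploy_model"},
}

run_order = run_pipeline(tasks, depends_on)
```

A real workflow manager adds scheduling, retries, and logging on top of this ordering, which is why a dedicated tool is usually preferred over hand-written scripts.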

Summary

To conclude, MLOps is a critical area that helps companies integrate and deploy machine learning and data science solutions efficiently. It ensures effective deployment and monitoring. MLOps provides large-scale, reliable, and secure deployment of ML models and reduces the overall time it takes to bring a model to production. It also covers monitoring the performance of machine learning models in the production environment.

Stay tuned for upcoming articles on MLOps. We will soon write detailed articles on each topic.