Apache Airflow: A Workflow Management Platform

Apache Airflow is a workflow management platform that schedules and monitors data pipelines.

We can also describe Airflow as a batch-oriented framework for building data pipelines. Airflow helps us build, schedule, and monitor data pipelines using a Python-based framework. It captures the activity of data processes and coordinates status updates across a distributed environment. Apache Airflow is not a data processing or ETL tool; it orchestrates the various tasks in a data pipeline. A data pipeline is a set of tasks that must be executed in a given order, respecting their dependencies, to achieve the main objective.

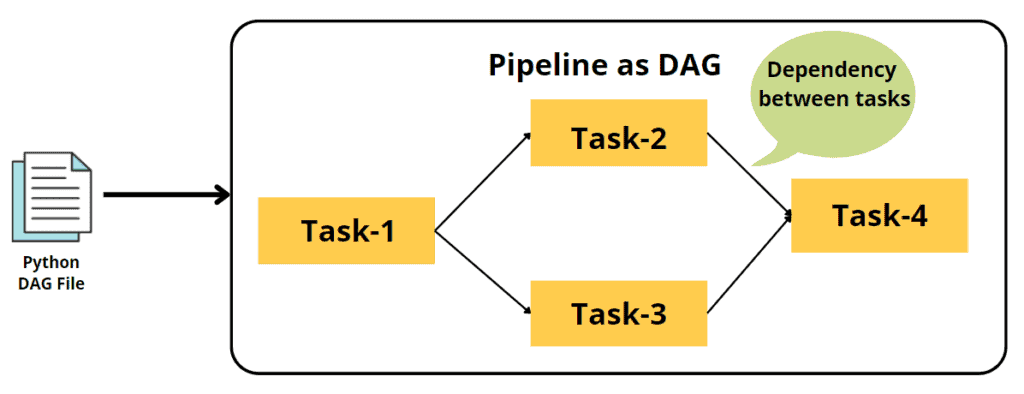

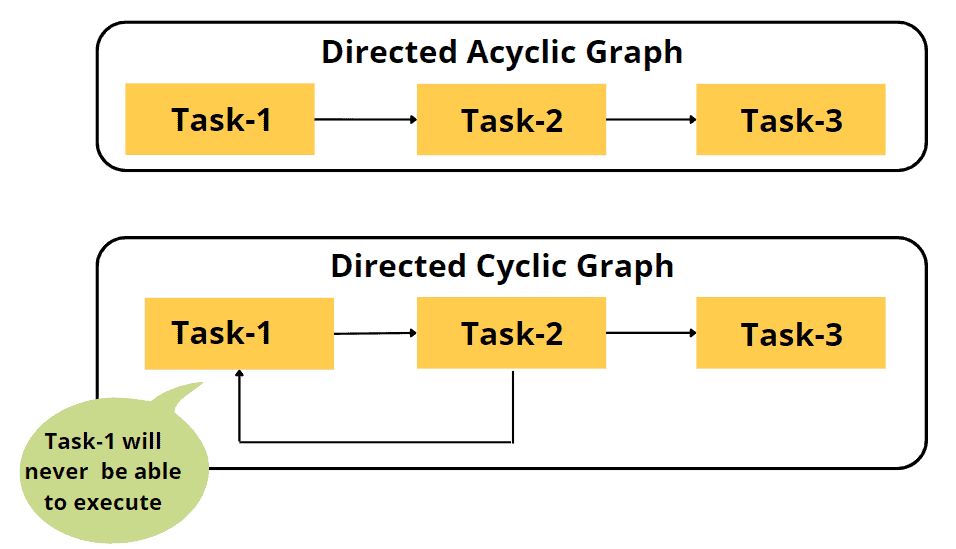

We can express the dependencies between tasks in a data pipeline as a graph. In graph-based solutions, tasks are represented as nodes and dependencies as directed edges between two tasks. A directed graph that contains a cycle can lead to a deadlock, so the graph must be acyclic. Airflow uses Directed Acyclic Graphs (DAGs) to represent a data pipeline and executes the tasks in the order the graph defines.
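
The graph idea can be sketched in plain Python. This is only an illustration, not Airflow's own API: the task names are hypothetical, and the standard-library `graphlib` module (Python 3.9+) stands in for the ordering logic a workflow tool would apply.

```python
from graphlib import TopologicalSorter

# Each key is a task (node); its value is the set of tasks it depends on
# (the directed edges pointing into it). Task names are hypothetical.
dependencies = {
    "fetch_data": set(),
    "clean_data": {"fetch_data"},
    "train_model": {"clean_data"},
    "publish_report": {"train_model"},
}

# static_order() yields a task only after all of its dependencies.
execution_order = list(TopologicalSorter(dependencies).static_order())
print(execution_order)
```

Because every task here has exactly one upstream task, there is only one valid order, from `fetch_data` through to `publish_report`.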

In this tutorial, we will explore the concepts of data pipelines and Apache Airflow. We will focus on DAGs and the Airflow architecture. We will also discuss when to use Airflow and when not to.

Data Pipelines

A data pipeline consists of a series of tasks for data processing. It has three key components: a source, processing tasks or steps, and a sink (or destination). Data pipelines allow data to flow between systems such as databases, data warehouses, data lakes, and cloud storage services. A data pipeline automates the transfer and transformation of data between a source and a sink repository. "Data pipeline" is the broader term for moving data between systems, and ETL is one kind of data pipeline. Data pipelines help us deal with complex data processing operations.
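
The three components can be sketched as three small Python functions. This is a minimal illustration under stated assumptions: the CSV text, the column names, and the `warehouse` list are all hypothetical stand-ins for a real source and sink.

```python
import csv
import io

# Hypothetical source: CSV text standing in for a database export or file.
SOURCE_CSV = "city,temp_f\nPune,86\nOslo,41\n"

def extract(raw_text):
    """Source: read rows from the raw CSV text."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Processing step: convert Fahrenheit to Celsius, rounded to 1 decimal."""
    return [
        {"city": row["city"], "temp_c": round((int(row["temp_f"]) - 32) * 5 / 9, 1)}
        for row in rows
    ]

def load(rows, sink):
    """Sink: append the processed rows to the destination (a list here)."""
    sink.extend(rows)
    return sink

warehouse = []  # stands in for a data warehouse table
load(transform(extract(SOURCE_CSV)), warehouse)
print(warehouse)
```

Each function depends on the output of the previous one, which is exactly the kind of dependency a workflow tool has to respect.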

Apache Airflow

Organizations today deal with many different workflows: collecting data from multiple sources, preprocessing it, uploading it, and reporting on it. Workflow management tools help us automate all those operations on a schedule. Apache Airflow is one such workflow management platform for scheduling and executing complex data pipelines. It represents each pipeline as a Directed Acyclic Graph (DAG) and executes the tasks in the order the graph defines.

Features of Apache Airflow:

- It is an open-source tool.

- It is a batch-oriented framework.

- It uses Directed Acyclic Graphs (DAGs) for creating workflows.

- It provides a web interface for monitoring pipelines.

- It is a Python-based tool.

- It schedules data pipelines.

- It offers robust integrations with cloud platforms.

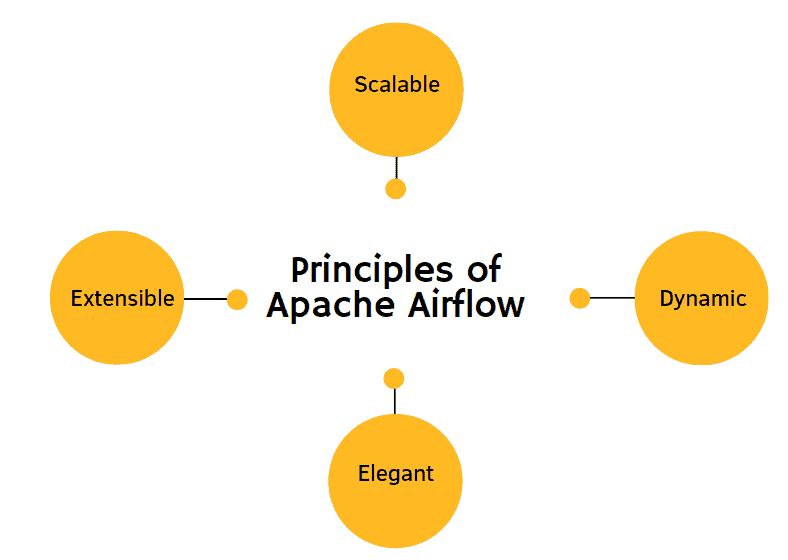

Principles of Apache Airflow

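- Extensible: In Airflow, we can define our own operators and extend the libraries.

- Scalable: Airflow has a modular architecture and uses a message queue to orchestrate an arbitrary number of workers, so it can scale out as workloads grow.

- Dynamic: In Airflow, pipelines are defined in Python code, so they can be generated dynamically.

- Elegant: In Airflow, we can create lean and explicit pipelines.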

Why Directed Acyclic Graphs?

A Directed Acyclic Graph (DAG) consists of nodes and directed edges, with no loops or cycles. Acyclic means there are no circular dependencies between the tasks in the graph. A circular dependency creates a problem in task execution. For example, if task-1 depends on task-2 and task-2 depends on task-1, neither task can ever start, which causes a deadlock and a logical inconsistency.
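
The deadlock scenario can be reproduced in a few lines of plain Python. This is a sketch using the standard-library `graphlib` module (Python 3.9+), not Airflow's own validation code, and the helper name `find_execution_order` is hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# task_1 depends on task_2 and task_2 depends on task_1: a circular dependency.
cyclic_dependencies = {
    "task_1": {"task_2"},
    "task_2": {"task_1"},
}

def find_execution_order(dependencies):
    """Return a valid task order, or None if the graph contains a cycle."""
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError:
        return None

# The cyclic graph has no valid order; the acyclic one does.
print(find_execution_order(cyclic_dependencies))
print(find_execution_order({"task_1": set(), "task_2": {"task_1"}}))
```

Rejecting the cyclic graph up front is what makes it possible to guarantee that every task in an accepted graph can eventually run.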

Data Pipeline Execution in Airflow

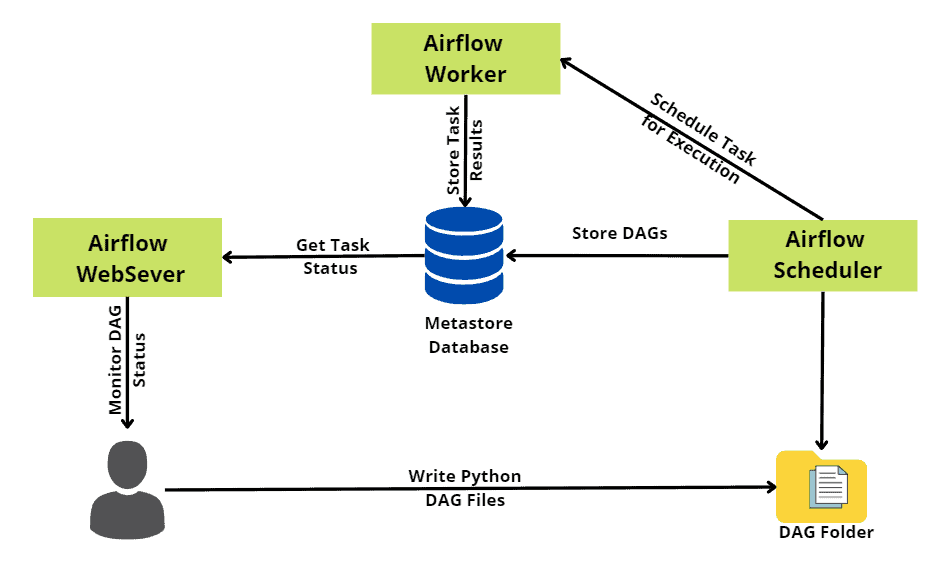

In Airflow, we write our DAGs in Python and schedule them for execution at regular intervals, such as every minute, every hour, every day, or every week. Airflow comprises four main components:

- Airflow scheduler: It parses the DAGs and schedules the tasks according to their scheduled time. After scheduling, it submits the tasks to the Airflow workers for execution.

- Airflow workers: They pick up the scheduled tasks and execute them. Workers are the main engine responsible for performing the actual work.

- Airflow webserver: It visualizes the DAGs in a web interface and lets us monitor the DAG runs.

- Metadata database: It stores the status of the pipeline tasks.
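
How the four components cooperate can be sketched as a toy, single-process simulation. Everything here is an assumption made for illustration: the function names, the in-memory queue, and the dictionary used as a database are not Airflow's API. Real Airflow also queues a task only once its upstream tasks have finished, whereas this sketch simply queues all tasks in dependency order.

```python
from collections import deque
from graphlib import TopologicalSorter

# Hypothetical pipeline: task name -> (callable, upstream task names).
PIPELINE = {
    "extract": (lambda: "raw rows", set()),
    "transform": (lambda: "clean rows", {"extract"}),
    "load": (lambda: "rows stored", {"transform"}),
}

metadata_db = {}      # stands in for the metadata database: task -> state
task_queue = deque()  # stands in for the queue between scheduler and workers

def scheduler(pipeline):
    """Parse the pipeline and queue its tasks in dependency order."""
    graph = {name: upstream for name, (_, upstream) in pipeline.items()}
    for name in TopologicalSorter(graph).static_order():
        metadata_db[name] = "queued"
        task_queue.append(name)

def worker(pipeline):
    """Pick queued tasks one by one, run them, and record the outcome."""
    while task_queue:
        name = task_queue.popleft()
        metadata_db[name] = "running"
        try:
            pipeline[name][0]()
            metadata_db[name] = "success"
        except Exception:
            metadata_db[name] = "failed"

def webserver_view():
    """Render the task states a monitoring UI would read from the database."""
    return "\n".join(f"{task}: {state}" for task, state in metadata_db.items())

scheduler(PIPELINE)
worker(PIPELINE)
print(webserver_view())
```

The point of the sketch is the division of labor: the scheduler and the worker never call each other directly, and the monitoring view only reads the recorded states.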

When to use Airflow?

- We want to implement data pipelines using Python.

- We need to schedule pipelines at regular intervals.

- We need backfilling, which allows us to easily reprocess historical data.

- We want an interactive web interface for monitoring pipelines and debugging failures.
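
The idea behind interval scheduling and backfilling can be sketched in plain Python. This is only an illustration of the concept, not Airflow's backfill mechanism; the function names and the dates are hypothetical.

```python
from datetime import date, timedelta

def daily_intervals(start, end):
    """Yield (interval_start, interval_end) pairs, one per day in [start, end)."""
    current = start
    while current < end:
        yield current, current + timedelta(days=1)
        current += timedelta(days=1)

def backfill(start, end, process, already_done=()):
    """Run `process` for each daily interval that has not been processed yet."""
    completed = []
    for interval_start, interval_end in daily_intervals(start, end):
        if interval_start in already_done:
            continue  # this day was handled by an earlier run
        process(interval_start, interval_end)
        completed.append(interval_start)
    return completed

processed = backfill(
    start=date(2024, 1, 1),
    end=date(2024, 1, 5),
    process=lambda s, e: None,          # placeholder for the real pipeline run
    already_done={date(2024, 1, 2)},    # assume this day already succeeded
)
print(processed)
```

Because each run is tied to a fixed time interval, reprocessing history is just a matter of replaying the intervals that are missing.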

When not to use Airflow?

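- Streaming data pipelines: Airflow is batch-oriented and is not designed for streaming workloads.

- Highly dynamic pipelines: Airflow can deal with dynamic pipelines, but not highly dynamic ones.

- No programming knowledge: Pipelines are written in Python, so the team needs programming experience.

- Larger use cases: Pipelines can become complex to maintain as they grow.

- Data lineage and data versioning: Airflow does not provide these features out of the box.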

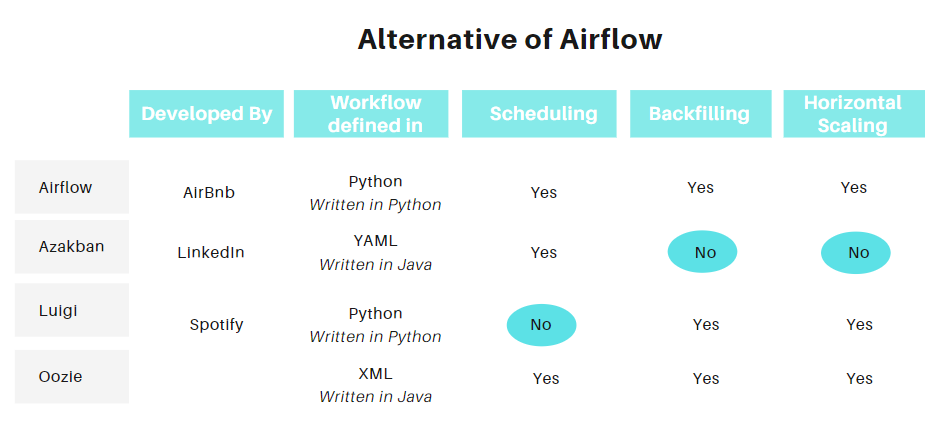

Alternatives to Airflow

Some alternatives to Airflow are Argo, Conductor, Make, NiFi, Metaflow, and Kubeflow.

Summary

In Apache Airflow, data pipelines are represented as Directed Acyclic Graphs that define tasks as nodes and dependencies as directed edges. Airflow is an open-source, batch-oriented, Python-based workflow management platform. It has four main components: the scheduler, the workers, the webserver, and the metadata database. Together, these components coordinate and execute data pipelines and provide monitoring through the web interface.

In the upcoming tutorials, we will focus on Airflow implementation, operators, templates, and semantic scheduling. You can also explore Big Data technologies such as Hadoop and Spark on this portal.