Text Analytics for Beginners using Python spaCy Part-2

In the previous article, Text Analytics for Beginners using Python Part-1, we looked at some of the cool things spaCy can do in general. We saw what natural language processing (NLP) is, and then covered the basic text analytics operations for cleaning and analyzing text data with spaCy. In this article, we will learn other important topics of NLP: entity recognition, dependency parsing, and word vector representation using spaCy.

Entity Detection

Entity detection, also called entity recognition, is a more advanced form of language processing that identifies important elements like places, people, organizations, and languages within an input string of text. This is really helpful for extracting information from text, since you can quickly pick out important topics or identify key sections.

Let’s try out some entity detection using a few paragraphs from this recent article. We’ll use .label_ to grab the string label for each entity that’s detected in the text (and .label for the matching integer ID), and then we’ll take a look at these entities in a more visual format using spaCy’s displaCy visualizer.

# import the English model en_core_web_sm, which provides vocabulary, syntax & entities

import en_core_web_sm

# load en_core_web_sm; the resulting "nlp" object processes text into annotated documents

nlp = en_core_web_sm.load()

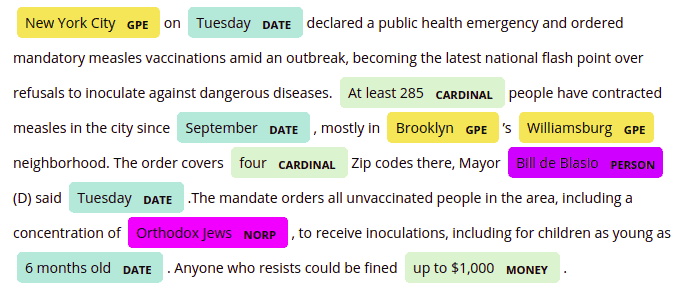

nytimes= nlp(u"""New York City on Tuesday declared a public health emergency and ordered mandatory measles vaccinations amid an outbreak, becoming the latest national flash point over refusals to inoculate against dangerous diseases.At least 285 people have contracted measles in the city since September, mostly in Brooklyn’s Williamsburg neighborhood. The order covers four Zip codes there, Mayor Bill de Blasio (D) said Tuesday.The mandate orders all unvaccinated people in the area, including a concentration of Orthodox Jews, to receive inoculations, including for children as young as 6 months old. Anyone who resists could be fined up to $1,000.""")

entities=[(i, i.label_, i.label) for i in nytimes.ents]

print(entities)

Output:

[(New York City, 'GPE', 384), (Tuesday, 'DATE', 391), (At least 285, 'CARDINAL', 397), (September, 'DATE', 391), (Brooklyn, 'GPE', 384), (Williamsburg, 'GPE', 384), (four, 'CARDINAL', 397), (Bill de Blasio, 'PERSON', 380), (Tuesday, 'DATE', 391), (Orthodox Jews, 'NORP', 381), (6 months old, 'DATE', 391), (up to $1,000, 'MONEY', 394)]

Using this technique, we can identify a variety of entities within the text. The spaCy documentation provides a full list of supported entity types, and the short example above already shows several of them: geopolitical locations such as cities (GPE), date-related words (DATE), important numbers (CARDINAL), specific individuals (PERSON), etc.
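Once the entities are extracted, a common next step is to group them by label. The following is a minimal sketch rather than part of the original walkthrough: the (text, label) pairs are copied by hand from the printed output above, and the helper name group_by_label is hypothetical. In a live session the pairs would come from something like [(ent.text, ent.label_) for ent in nytimes.ents].

```python
from collections import defaultdict

# (text, label) pairs copied by hand from the printed output above.
# Assumption: in a live session these would be built from nytimes.ents.
entities = [
    ("New York City", "GPE"),
    ("Tuesday", "DATE"),
    ("At least 285", "CARDINAL"),
    ("September", "DATE"),
    ("Brooklyn", "GPE"),
    ("Williamsburg", "GPE"),
    ("four", "CARDINAL"),
    ("Bill de Blasio", "PERSON"),
    ("Tuesday", "DATE"),
    ("Orthodox Jews", "NORP"),
    ("6 months old", "DATE"),
    ("up to $1,000", "MONEY"),
]


def group_by_label(pairs):
    """Map each label to its distinct entity texts, in first-seen order."""
    grouped = defaultdict(list)
    for text, label in pairs:
        if text not in grouped[label]:  # skip repeats such as the second "Tuesday"
            grouped[label].append(text)
    return dict(grouped)


grouped = group_by_label(entities)
for label, texts in grouped.items():
    print(label, texts)
```

Grouping this way makes it easy to answer questions such as "which places does the text mention?" by looking up a single key, here grouped["GPE"].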

Using displaCy we can also visualize our input text, with each identified entity highlighted by color and labeled. We’ll use style = "ent" to tell displaCy that we want to visualize entities here.

# import displacy from spacy to visualize the detected entities

from spacy import displacy

displacy.render(nytimes, style = "ent", jupyter = True)

Output: [displaCy entity visualization]

Dependency Parsing

Dependency parsing is a language processing technique that allows us to better determine the meaning of a sentence by analyzing how it’s constructed to determine how the individual words relate to each other.

Consider, for example, the sentence “Bill throws the ball.” We have two nouns (Bill and ball) and one verb (throws). But we can’t just look at these words individually, or we may end up thinking that the ball is throwing Bill! To understand the sentence correctly, we need to look at the word order and sentence structure, not just the words and their parts of speech.

Doing this is quite complicated, but thankfully spaCy will take care of the work for us! Below, let’s give spaCy another short sentence pulled from the news headlines. Then we’ll use another spaCy attribute called noun_chunks, which breaks the input down into nouns and the words describing them, and iterate through each chunk in our source text, printing the chunk’s text, its root word, the root’s dependency label, and the head word that the root attaches to.

# import the English model en_core_web_sm, which provides vocabulary, syntax & entities

import en_core_web_sm

# load en_core_web_sm

nlp = en_core_web_sm.load()

# "nlp" Object is used to create documents with linguistic annotations.

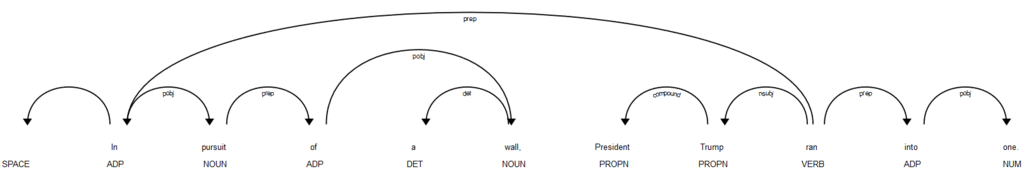

docp = nlp("In pursuit of a wall, President Trump ran into one.")

for chunk in docp.noun_chunks:
    print(chunk.text, chunk.root.text, chunk.root.dep_, chunk.root.head.text)

Output:

pursuit pursuit pobj In
a wall wall pobj of
President Trump Trump nsubj ran

This output can be a little bit difficult to follow, but since we’ve already imported the displaCy visualizer, we can use that to view a dependency diagram using style = "dep" that’s much easier to understand:

# import displacy from spacy to visualize the dependency parse

from spacy import displacy

displacy.render(docp, style="dep", jupyter=True)

Output: [displaCy dependency diagram]

Of course, we can also check out spaCy’s documentation on dependency parsing to get a better understanding of the different labels that might get applied to our text depending on how each sentence is interpreted.
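To make the earlier “Bill throws the ball” example concrete, here is a small sketch of how dependency labels settle who is doing what. The triples below are written by hand for illustration and are an assumption about what a parser would produce, not output captured from spaCy; the helper name who_did_what is hypothetical.

```python
# Hand-written (token, dependency label, head) triples for "Bill throws the ball."
# Assumption: these mirror the kind of labels a dependency parser assigns;
# they were not produced by running spaCy.
parse = [
    ("Bill", "nsubj", "throws"),   # nominal subject of the verb
    ("throws", "ROOT", "throws"),  # main verb of the sentence
    ("the", "det", "ball"),        # determiner attached to "ball"
    ("ball", "dobj", "throws"),    # direct object of the verb
]


def who_did_what(triples):
    """Read (subject, verb, object) off the dependency labels."""
    verb = next(tok for tok, dep, _ in triples if dep == "ROOT")
    subject = next(
        tok for tok, dep, head in triples if dep == "nsubj" and head == verb
    )
    obj = next(
        tok for tok, dep, head in triples if dep == "dobj" and head == verb
    )
    return subject, verb, obj


print(who_did_what(parse))
```

Because the roles come from the labels rather than from word order alone, the subject and object are never confused, which is exactly the ambiguity that part-of-speech tags by themselves cannot resolve.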

Word Vector Representation

When we’re looking at words alone, it’s difficult for a machine to understand connections that a human would understand immediately. Engine and car, for example, have what might seem like an obvious connection (cars run using engines), but that link is not so obvious to a computer.

Thankfully, there’s a way we can represent words that captures more of these sorts of connections. A word vector is a numeric representation of a word that communicates its relationship to other words.

Each word is interpreted as a unique and lengthy array of numbers. You can think of these numbers as being something like GPS coordinates. GPS coordinates consist of two numbers (latitude and longitude), and if we saw two sets of GPS coordinates that were numerically close to each other (like 43,-70, and 44,-70), we would know that those two locations were relatively close together. Word vectors work similarly, although there are a lot more than two coordinates assigned to each word, so they’re much harder for a human to eyeball.

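Using spaCy’s en_core_web_sm model, let’s take a look at the length of a vector for a single word, and what that vector looks like using .vector and .shape. Note that the small (sm) models do not ship with pretrained word vectors, so .vector here returns a 96-dimensional context-sensitive tensor; larger models such as en_core_web_md and en_core_web_lg include pretrained word vectors.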

# import en_core_web_sm small spacy model

import en_core_web_sm

# load en_core_web_sm, which provides vocabulary, syntax & entities

nlp = en_core_web_sm.load()

# "nlp" Object is used to create documents with linguistic annotations.

mango = nlp(u'mango')

print(mango.vector.shape)

print(mango.vector)

Output:

(96,)

[ 1.0466383 -1.5323697 -0.72177905 -2.4700649 -0.2715162 1.1589639 1.7113379 -0.31615403 -2.0978343 1.837553 1.4681302 2.728043 -2.3457408 -5.17184 -4.6110015 -0.21236466 -0.3029521 4.220028 -0.6813917 2.4016762 -1.9546705 -0.85086954 1.2456163 1.5107994 0.4684736 3.1612053 0.15542296 2.0598564 3.780035 4.6110964 0.6375268 -1.078107 -0.96647096 -1.3939928 -0.56914186 0.51434743 2.3150034 -0.93199825 -2.7970662 -0.8540115 -3.4250052 4.2857723 2.5058174 -2.2150877 0.7860181 3.496335 -0.62606215 -2.0213525 -4.47421 1.6821622 -6.0789204 0.22800982 -0.36950028 -4.5340714 -1.7978683 -2.080299 4.125556 3.1852438 -3.286446 1.0892276 1.017115 1.2736416 -0.10613725 3.5102775 1.1902348 0.05483437 -0.06298041 0.8280688 0.05514218 0.94817173 -0.49377063 1.1512338 -0.81374085 -1.6104267 1.8233354 -2.278403 -2.1321895 0.3029334 -1.4510616 -1.0584296 -3.5698352 -0.13046083 -0.2668339 1.7826645 0.4639858 -0.8389523 -0.02689964 2.316218 5.8155413 -0.45935947 4.368636 1.6603007 -3.1823301 -1.4959551 -0.5229269 1.3637555 ]

There’s no way that a human could look at that array and identify it as meaning “mango,” but representing the word this way works well for machines because it allows us to represent both the word’s meaning and its “proximity” to other similar words using the coordinates in the array.
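The idea of “proximity” between vectors is usually measured with cosine similarity. The sketch below is an illustration under stated assumptions: the three-dimensional vectors are invented for this example (real word vectors have far more dimensions and are learned from data), and cosine_similarity is a plain-Python helper written here, not a spaCy function.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors invented for illustration only; they are not taken from any model.
toy_vectors = {
    "car": [0.9, 0.8, 0.1],
    "engine": [0.8, 0.9, 0.2],
    "mango": [0.1, 0.2, 0.9],
}

car_engine = cosine_similarity(toy_vectors["car"], toy_vectors["engine"])
car_mango = cosine_similarity(toy_vectors["car"], toy_vectors["mango"])

print("car vs engine:", round(car_engine, 3))
print("car vs mango:", round(car_mango, 3))
```

With these made-up numbers, “car” scores much closer to “engine” than to “mango”, which is the kind of relationship that learned word vectors are meant to capture.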

Resources and Next Steps

Congratulations, you have made it to the end of this tutorial!

In this tutorial, we have covered entity recognition, dependency parsing, and word vector representation with spaCy. In the next article, we will build our own machine learning model with scikit-learn and perform text classification.

Text Classification using Python spaCy

This article was originally published at https://www.dataquest.io/blog/tutorial-text-classification-in-python-using-spacy/

Reach out to me on Linkedin: https://www.linkedin.com/in/avinash-navlani/