Apache Sqoop

In this tutorial, we will focus on the data ingestion tool Apache Sqoop for processing big data.

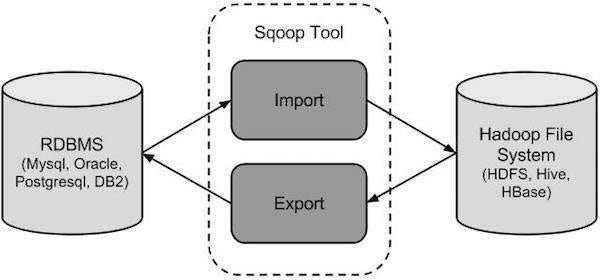

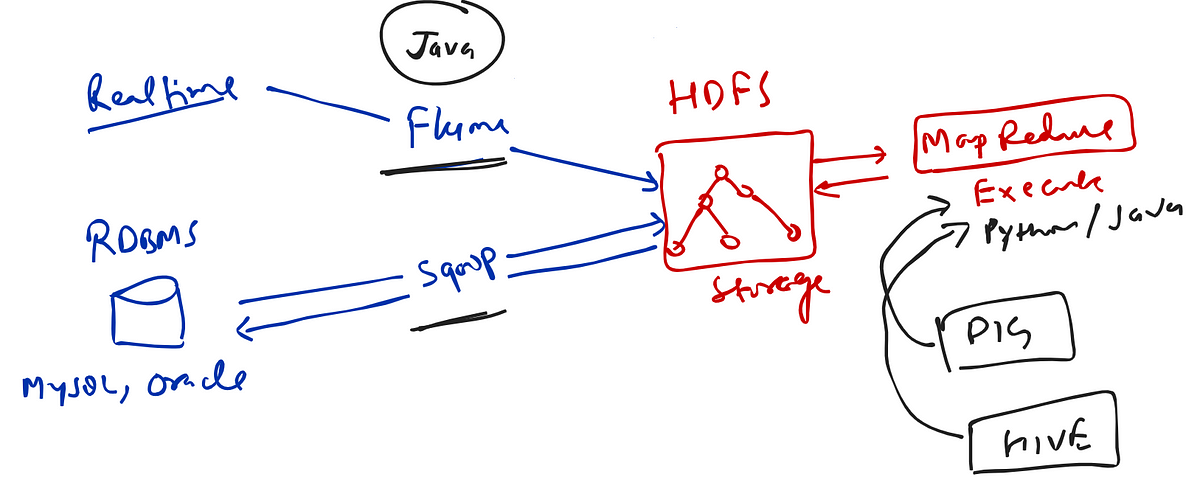

Most of the web application portals store in Relation databases. These relational databases are the most common source for data storage. We need to transfer this data into the Hadoop system for analysis and processing purposes for various applications. Sqoop is a data ingestion tool that is designed to transfer data between RDBMS systems(such as Oracle, MySQL, SQL Server, Postgres, Teradata, etc) and Hadoop HDFS.

Sqoop stands for — “SQL to Hadoop & Hadoop to SQL”. It is developed by Cloudera.

Why do we need Sqoop?

Before Sqoop, the developer needs to write the MapReduce program to extract and load the data between RDBMS and Hadoop HDFS. This will cause the following problems:

- Loading data from heterogeneous sources makes it challenging.

- Maintaining data consistency also becomes challenging.

- Loading bulk data also cause various challenges.

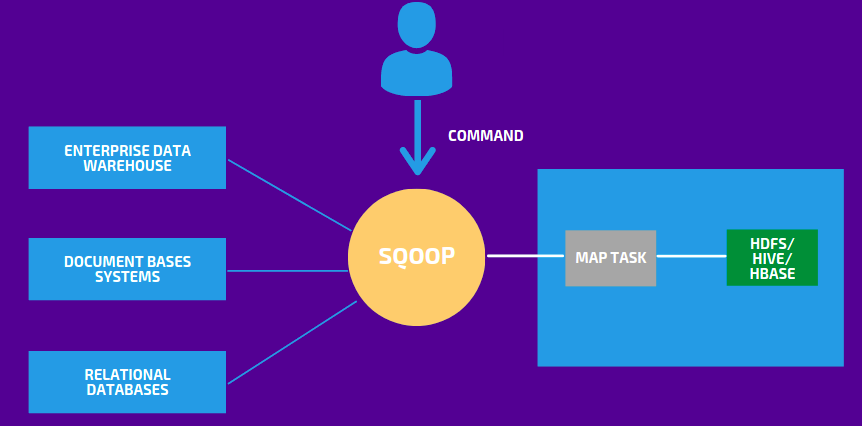

Sqoop makes it easier for the developer by providing CLI commands for data import and export. The developer needs to provide the basic required details such as source, destination, type of operations, and user authentication. It converts command from CLI to Map-Reduce Job.

Sqoop Features

- It is a kind of data ingestion tool.

- It is not a complete ETL tool, it only performs extraction and loading.

- It performs full load as well as incremental load.

- It performs parallel import and export using the YARN framework.

- It can directly import the SQL query results to HDFS.

- It can integrate with Kerberos Security.

- It can directly load the data into HIVE and HBase.

- It can compress the data using deflate(gzip) algorithm.

Sqoop Architecture

- Submit Command: Sqoop command for import/export the data.

- Fetch Data: Sqoop fetches the data from different-different sources such as Enterprize Data Warehouse, Relational Databases, Document-Based Systems.

- Execute Mapper: Sqoop runs the multiple mappers for loading/exporting the data to or from HDFS, Hive, HBase.

Sqoop Import and Export Operations

Create a Database in RDBMS(MySQL):

- Start MySQL in Cloudera Quickstart Image

[cloudera@quickstart ~]$ mysql -uroot -pcloudera - See the databases in MySQL using show databases commands.

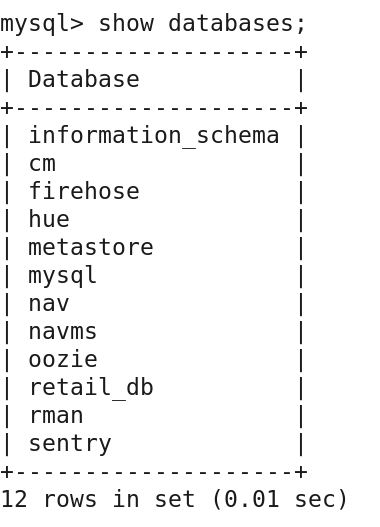

mysql> show databases;

Here, you will see a retail_db database. It means we already have a few default databases. It means there is no need to create the database, we can use the available database. In our example, we are using retail_db.

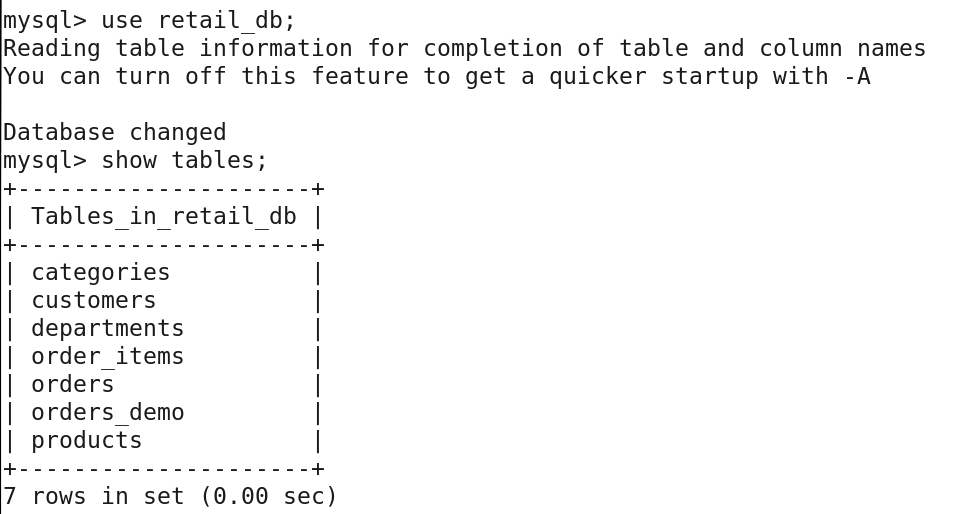

3. Show tables in retail_db using show tables statement.

Select retail_db databases using the use statement.

mysql> use retail_db;

mysql> show tables;

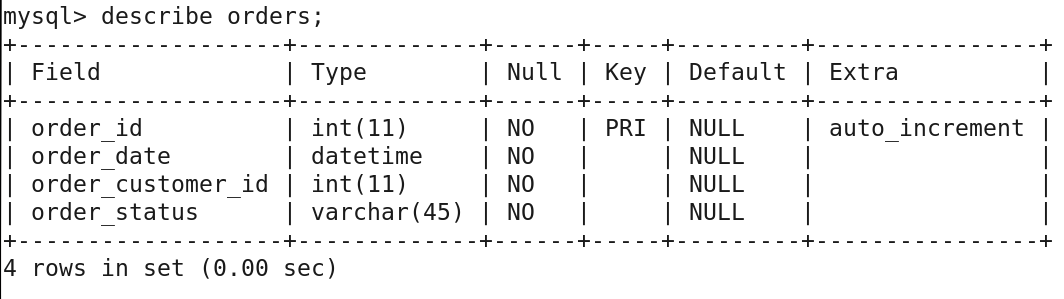

4. Check the schema of the orders table using describe command.

mysql> describe orders;

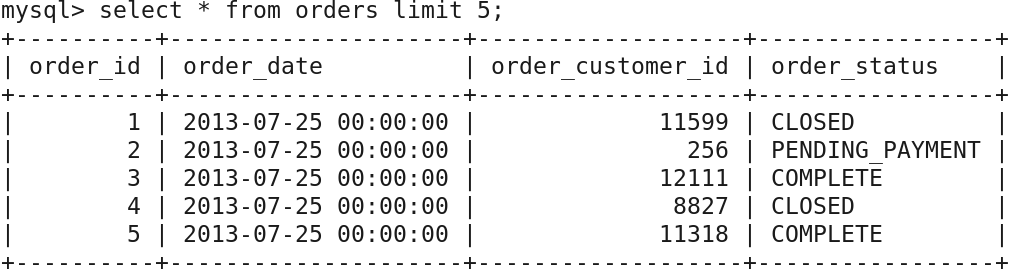

5. You can also see the records of orders table:

mysql> select * from orders limit 5;

In the sub-section, we have worked on the MySQL database and explored the orders table in retail_db databases.

Let’s jump to Apache Sqoop

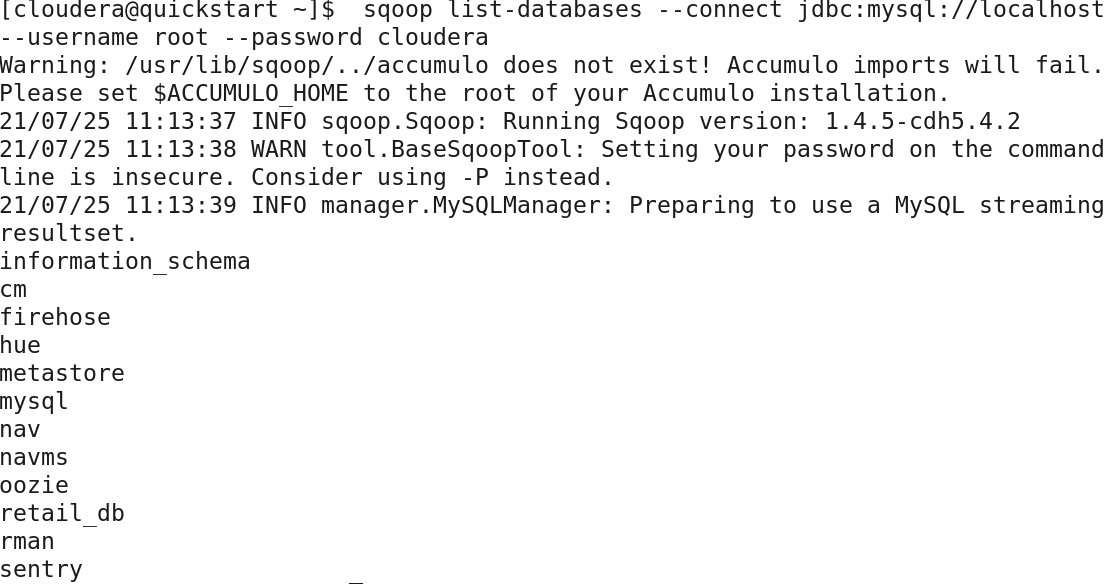

- We can list all the databases of MySQL RDBMS from sqoop using the following command:

[cloudera@quickstart ~]$ sqoop list-databases — connect jdbc:mysql://localhost — username root — password cloudera

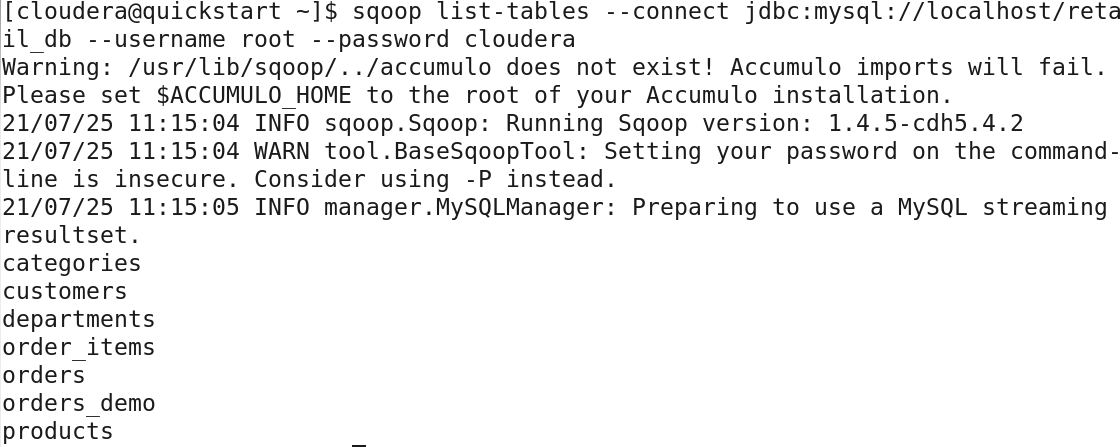

- We can list all the tables from a MySQL retail_db database sqoop using the following command:

[cloudera@quickstart ~]$ sqoop import — connect jdbc:mysql://localhost/retail_db — table orders — username root — password cloudera

Import Data from MySQL to HDFS

- Import the data from retail_db database orders table:

[cloudera@quickstart ~]$ sqoop import — connect jdbc:mysql://localhost/retail_db — table orders — username root — password cloudera - You can check the dataset using the following cat command:

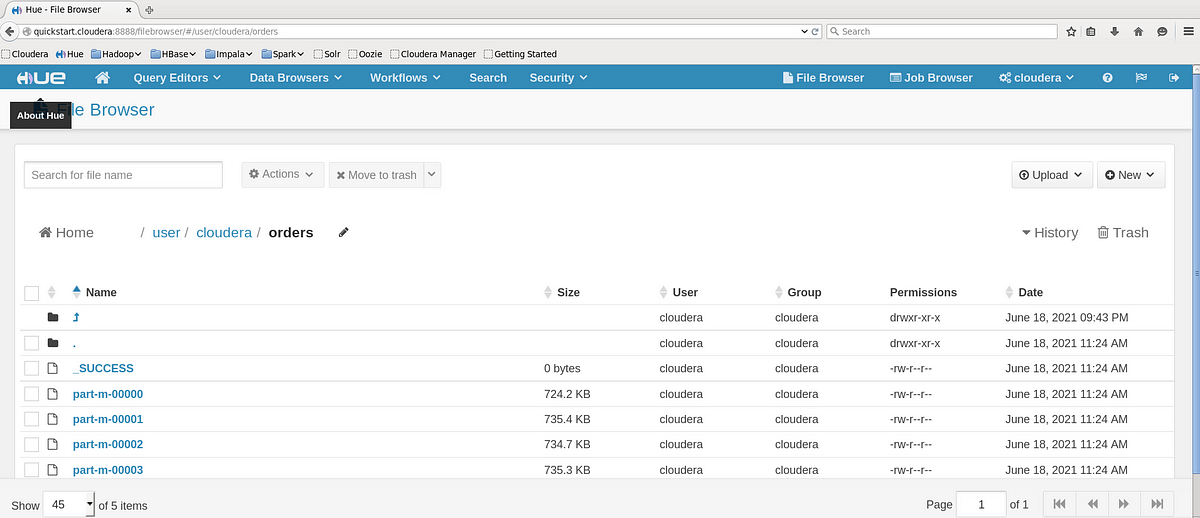

[cloudera@quickstart ~]$ hdfs dfs -cat /user/cloudera/orders/part-m-* - You can also check the dataset in Hue: HUE>>File Browser>>/ user/ cloudera/ orders

Export Data from HDFS to MySQL

- Create a table in MySQL:

[cloudera@quickstart ~]$ mysql -uroot -pcloudera

- Change Database

mysql> use retail_db - Create a table:

- Export into the orders_demo table to

[cloudera@quickstart ~]$ sqoop export — connect jdbc:mysql://localhost/retail_db — table orders_demo — username root — password cloudera — export-dir /user/cloudera/orders

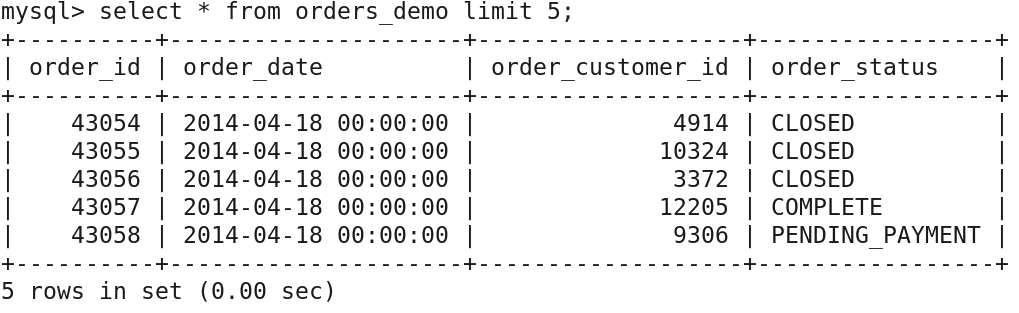

- You can check the data in MySQL table orders_demo using Select command:

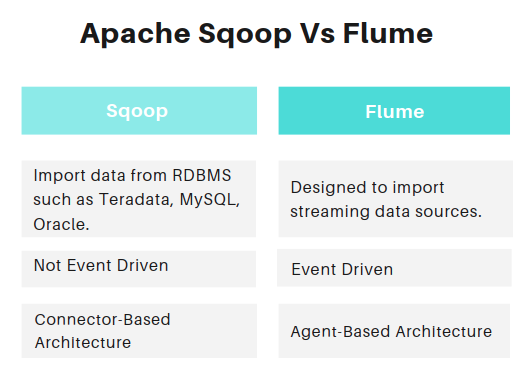

Comparison Between Flume vs Sqoop

Summary

In this tutorial, we have discussed Apache Sqoop, its need, features, and architecture. Also, We have practiced the Sqoop Commands and performed various operations such as Import data from MySQL to HDFS, Export data from HDFS to MySQL. We have also compared the Sqoop with Flume.